Company Alerts from Twitter

This follows hot on the heels of our article on using movements in Twitter sentiment and volume as a precursor to Bitcoin price movements.

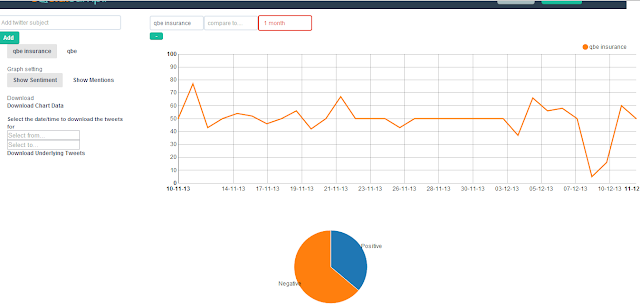

Now we're excited to announce that we've set up automated alerts for any subject, which will be constantly monitored for spikes in sentiment and volume. Check out QBE Insurance from Australia, which issued a profit warning on the 9th of December.

Here's the sentiment:

And here's the mention volume:

As with the Bitcoin scenario, there's a very nice correlation with the bad news that came out on the 9th of December.

How it all works

- We configure the search criteria to ensure only the relevant tweets are extracted

- SocialSamplr will figure out an average sentiment and mention count for a given subject over a default time-frame of a month (can be configured to any value).

- The average will then be broken down to a default time-frame of 5 minutes (again, this will be fully configurable; for example, lower-volume subjects would use a longer time-frame than 5 minutes so that there's enough time for the tweet volume to be significant).

- If a tolerance level for tweet volume (default of 100%) or sentiment (default of 30%) is breached during that time-frame, an alert is sent. Once again, the tolerance levels will be fully configurable.

- Once an alert is sent, the application will go into a "silent" period where no more alerts will be sent for a configurable period of time (for example, 24 hours).

- Once the "silent" period has ended the application will calculate the sentiment/mention average during this period.

- If it differs significantly from the last month's average (by default, by more than 25%), the application will then use it as the new "baseline" average.

- Otherwise it will revert back to using the monthly average as above.
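
To make the steps above a bit more concrete, here's a rough Python sketch of the logic. It isn't the actual SocialSamplr code: the names (`Baseline`, `AlertMonitor`, `monthly_baseline` and so on) are made up for illustration, a "month" is assumed to be 30 days, sentiment is assumed to be a positive score (say 0 to 1), and a "breach" is assumed to mean the value for the time-frame deviating from the baseline by more than the tolerance percentage.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Baseline:
    mentions_per_window: float  # expected tweet count in one time-frame
    sentiment: float            # average sentiment (assumed positive scale)


def relative_change(value: float, reference: float) -> float:
    """Fractional deviation of value from reference (0.3 means 30%)."""
    if reference == 0:
        return 0.0 if value == 0 else float("inf")
    return abs(value - reference) / abs(reference)


def monthly_baseline(total_mentions: float, avg_sentiment: float,
                     period: timedelta = timedelta(days=30),
                     window: timedelta = timedelta(minutes=5)) -> Baseline:
    """Break the monthly mention count down into per-time-frame averages."""
    windows = period / window  # number of time-frames in the period
    return Baseline(total_mentions / windows, avg_sentiment)


class AlertMonitor:
    def __init__(self, monthly: Baseline,
                 volume_tolerance: float = 1.0,       # 100%
                 sentiment_tolerance: float = 0.3,    # 30%
                 rebaseline_threshold: float = 0.25,  # 25%
                 silent_period: timedelta = timedelta(hours=24)):
        self.monthly = monthly
        self.baseline = monthly
        self.volume_tolerance = volume_tolerance
        self.sentiment_tolerance = sentiment_tolerance
        self.rebaseline_threshold = rebaseline_threshold
        self.silent_period = silent_period
        self.silent_until: Optional[datetime] = None

    def check(self, now: datetime, mentions: float, sentiment: float) -> bool:
        """Return True if an alert should be sent for this time-frame."""
        if self.silent_until is not None and now < self.silent_until:
            return False  # still silent: no further alerts
        volume_breach = relative_change(
            mentions, self.baseline.mentions_per_window) > self.volume_tolerance
        sentiment_breach = relative_change(
            sentiment, self.baseline.sentiment) > self.sentiment_tolerance
        if volume_breach or sentiment_breach:
            self.silent_until = now + self.silent_period
            return True
        return False

    def end_silent_period(self, observed: Baseline) -> None:
        """Pick the baseline to use once the silent period is over."""
        shifted = (
            relative_change(observed.mentions_per_window,
                            self.monthly.mentions_per_window)
            > self.rebaseline_threshold
            or relative_change(observed.sentiment, self.monthly.sentiment)
            > self.rebaseline_threshold
        )
        # Adopt the silent-period average if it moved enough, else revert.
        self.baseline = observed if shifted else self.monthly
        self.silent_until = None
```

In use, you'd build the baseline once with `monthly_baseline(...)`, call `check(...)` at the end of every time-frame, and call `end_silent_period(...)` with the averages observed while alerts were muted. Note that under these assumptions a volume alert can only fire on a spike, since volume can't fall by more than 100%.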

If anyone wants to be an early adopter for this exciting new functionality (and hence get to use it for free), just get in touch. Remember, we have access to every tweet so you miss nothing!